Topic: Singularity sighted. (Read 37871 times)

Fritz

Adept

Posts: 1746

Reputation: 7.64

Re:Singularity sighted.

« Reply #60 on: 2015-05-02 15:59:32 »

Cool Singularity Alert: synthetic DNA and tech fusion. If we build 'em, they can take over the mess we've made of our species and our home. I wish 'em luck.

Cheers

Fritz

Researchers cradle silver nanoclusters inside synthetic DNA to create a programmed, tunable fluorescent array

Source: Phys.org

Author: Julie Cohen

Date: 2015.04.23

The silver used by Beth Gwinn's research group at UC Santa Barbara has value far beyond its worth as a commodity, even though it's used in very small amounts.

The group works with the precious metal to create nanoscale silver clusters with unique fluorescent properties. These properties are important for a variety of sensing applications including biomedical imaging.

The team's latest research is published in a featured article in this month's issue of ACS Nano, a journal of the American Chemical Society. The scientists positioned silver clusters at programmed sites on a nanoscale breadboard, a construction base for prototyping of photonics and electronics. "Our 'breadboard' is a DNA nanotube with spaces programmed 7 nanometers apart," said lead author Stacy Copp, a graduate student in UCSB's Department of Physics.

"Due to the strong interactions between DNA and metal atoms, it's quite challenging to design DNA breadboards that keep their desired structure when these new interactions are introduced," said Gwinn, a professor in UCSB's Department of Physics. "Stacy's work has shown that not only can the breadboard keep its shape when silver clusters are present, it can also position arrays of many hundreds of clusters containing identical numbers of silver atoms—a remarkable degree of control that is promising for realizing new types of nanoscale photonics."

The results of this novel form of DNA nanotechnology address the difficulty of achieving uniform particle sizes and shapes. "In order to make photonic arrays using a self-assembly process, you have to be able to program the positions of the clusters you are putting on the array," Copp explained. "This paper is the first demonstration of this for silver clusters."

The colors of the clusters are largely determined by the DNA sequence that wraps around them and controls their size. To create a positionable silver cluster with DNA-programmed color, the researchers engineered a piece of DNA with two parts: one that wraps around the cluster and the other that attaches to the DNA nanotube. "Sticking out of the nanotube are short DNA strands that act as docking stations for the silver clusters' host strands," Copp explained.

The research group's team of graduate and undergraduate researchers is able to tune the silver clusters to fluoresce in a wide range of colors, from blue-green all the way to the infrared—an important achievement because tissues have windows of high transparency in the infrared. According to Copp, biologists are always looking for better dye molecules or other infrared-emitting objects to use for imaging through a tissue.

"People are already using similar silver cluster technologies to sense mercury ions, small pieces of DNA that are important for human diseases, and a number of other biochemical molecules," Copp said. "But there's a lot more you can learn by putting the silver clusters on a breadboard instead of doing experiments in a test tube. You get more information if you can see an array of different molecules all at the same time."

The modular design presented in this research means that its step-by-step process can be easily generalized to silver clusters of different sizes and to many types of DNA scaffolds. The paper walks readers through the process of creating the DNA that stabilizes silver clusters. This newly outlined protocol offers investigators a new degree of control and flexibility in the rapidly expanding field of nanophotonics.

The overarching theme of Copp's research is to understand how DNA controls the size and shape of the silver clusters themselves and then figure out how to use the fact that these silver clusters are stabilized by DNA in order to build nanoscale arrays.

"It's challenging because we don't really understand the interactions between silver and DNA just by itself," Copp said. "So part of what I've been doing is using big datasets to create a bank of working sequences that we've published so other scientists can use them. We want to give researchers tools to design these types of structures intelligently instead of just having to guess."

The paper's acknowledgements include a dedication to "those students who lost their lives in the Isla Vista tragedy and to the courage of the first responders, whose selfless actions saved many lives."

More information: ACS Nano, pubs.acs.org/doi/abs/10.1021/nn506322q

Read more at: http://phys.org/news/2015-04-cradle-silver-nanoclusters-synthetic-dna.html#jCp

Where there is the necessary technical skill to move mountains, there is no need for the faith that moves mountains -anon-

Fritz

Re:Singularity sighted.

« Reply #61 on: 2015-05-02 16:10:20 »

This gets us up to date on the Synthetic Bio side, I think. Now we need Lucifer to get us up to speed on the AI world.

Cheers

Fritz

Design and build of synthetic DNA goes back to 'BASIC'

Source: Imperial College

Author: Hayley Dunning

Date: 2015.03.10

A new technique for creating artificial DNA that is faster, more accurate and more flexible than existing methods has been developed by scientists.

The new system – called BASIC – is a major advance for the field of synthetic biology, which designs and builds organisms able to make useful products such as medicines, energy, food, materials and chemicals.

To engineer new organisms, scientists build artificial genes from individual molecules and then put these together to create larger genetic constructs which, when inserted into a cell, will create the required product. Various attempts have been made to standardise the design and assembly process but, until now, none have been completely successful.

BASIC, created by researchers from Imperial’s Centre for Synthetic Biology & Innovation, combines the best features of the most popular methods while overcoming their limitations, creating a system that is fast, flexible and accurate. The new technique should enable greater advances in research and could offer industry a way to automate the design and manufacture of synthetic DNA.

Dr Geoff Baldwin, from Imperial’s Department of Life Sciences, explains: “BASIC uses standardised parts which, like Lego, have the same joining device, so parts will fit together in any order.

“Unlike some systems that can only join two parts at a time, forcing the gene to be built in several, time consuming steps, BASIC enables multiple parts to be joined together at once. It is also 99 per cent accurate, compared to bespoke designs which usually have an accuracy of around 70 per cent.”
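To put the 99 per cent versus 70 per cent figures in perspective, here is a rough back-of-the-envelope sketch. It assumes (my assumption, not something the article states) that "accuracy" means the independent probability that any one picked colony carries the correct construct; the function name and the 95% confidence target are hypothetical choices for illustration.

```python
import math

def colonies_needed(p_correct, confidence=0.95):
    """Smallest number of colonies to screen so that the chance of finding
    at least one correct construct reaches `confidence`.

    Assumes each colony is independently correct with probability p_correct.
    """
    if not 0 < p_correct < 1:
        raise ValueError("p_correct must be strictly between 0 and 1")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")
    # P(all n colonies wrong) = (1 - p)^n, and we need that <= 1 - confidence.
    return math.ceil(math.log(1 - confidence) / math.log(1 - p_correct))

print(colonies_needed(0.99))  # quoted accuracy of the standardised method
print(colonies_needed(0.70))  # quoted accuracy of bespoke designs
```

Under that reading, one colony is enough at 99 per cent, while a bespoke design at 70 per cent needs three, and the gap widens if several constructs have to be right at once.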

BASIC is fast to use because it can draw on a large database of standardised parts, which can be produced in bulk and stored for use as required, rather than creating new parts each time.

Industrial scale

The standardisation and accuracy of the process mean that it could be used on an industrial scale. BASIC is already set to be used in a high throughput automated process in SynbiCITE, the innovation and knowledge centre (IKC) based at Imperial which is promoting the adoption of synthetic biology by industry. Two industrial partners – Dr. Reddy's and Isogenica – are also already making use of BASIC in their research laboratories.

Professor Paul Freemont, co-Director of the Centre for Synthetic Biology & Innovation, says: “This system is an exciting development for the field of synthetic biology. If we are to make significant advances in this area of research, it is vital to be able to assemble DNA rapidly in multiple variations, and BASIC gives us the means to do this.”

Professor Stephen Chambers, CEO of SynbiCITE, says: “The way BASIC has been designed lends itself very well to automation and high throughput processes, which is the future of synthetic biology. If innovations in the field are to be translated into the marketplace, we need the capability to do things at larger scale – creating larger numbers of constructs so we have more opportunities to find something unique and valuable. BASIC is a foundational technology which will enable us to do this and will be one of the first protocols to be used in our new, fully automated platform for synthetic biology, set to begin production later this year.”

Fritz

Re:Singularity sighted.

« Reply #62 on: 2015-05-11 15:47:19 »

Seems we are still not ready to embrace AI for killing each other. We like to kill each other with the personal touch.

Cheers

Fritz

The human touch is optional in robot wars

Source: The Guardian

Author: Calum Chace

Date: 2015.04.16

Your editorial (Weapons systems are becoming autonomous entities. Human beings must take responsibility, 14 April) argues that killer robots should always remain under human control, because robots can never be morally responsible.

This may not be true if and when we create machines whose cognitive abilities match or exceed those of humans in every respect. Surveys indicate that around 50% of AI researchers think that could happen before 2050.

But long before then we will face other dilemmas. If wars can be fought by robots, would that not be better than human slaughter? And when robots can discriminate between combatants and civilian bystanders better than human soldiers, who should pull the trigger?

Calum Chace, author of Pandora’s Brain

Steyning, West Sussex

Fritz

Re:Singularity sighted.

« Reply #63 on: 2015-05-21 13:38:22 »

Well, if the UK Telegraph is talking about it, we know it is certain :-) ... interesting, though, that there is no acknowledgement of the reality that robotic systems are already an integral part of everyday life.

Cheers

Fritz

An explosion in artificial intelligence has sent us hurtling towards a post-human future, warns Martin Rees

Source: Telegraph UK

Author: Martin Rees

Date: 2015.05.21

In Davos a few years ago, I met a well-known Indian tycoon. Knowing I had the title Astronomer Royal, he asked: “Do you do the Queen’s horoscopes?” I responded, with a straight face: “If she wanted one, I’m the person she’d ask.” He then seemed eager to hear my predictions. I told him that markets would fluctuate and that there would be trouble in the Middle East. He paid rapt attention to these insights. But I then came clean. I said I was just an astronomer, not an astrologer. He immediately lost all interest in my predictions. And rightly so: scientists are rotten forecasters – worse, often, than writers of science fiction.

Nevertheless, 12 years ago, I wrote a book that I entitled Our Final Century? My publisher deleted the question-mark. The American publishers changed the title to Our Final Hour – Americans seek instant (dis)gratification. My theme was this: our Earth is 45 million centuries old, but this century is special. It’s the first when one species – ours – can determine the biosphere’s fate.

In the years since, a few forecasts have somewhat firmed up: the world is becoming more crowded – and warmer. There will be about 2 billion more people in 2050, and their collective “footprint” will threaten our finite planet’s ecology unless we can achieve more efficient use of energy and land. But we can’t predict the path of future technology that far ahead. Today’s smartphones would have seemed magic even 20 years ago, so in looking several decades ahead we must keep our minds open to breakthroughs that may now seem like science fiction. These will offer great hopes, but also great fears.

Society is more interconnected than ever, and consequently more vulnerable. We depend on elaborate networks: electric-power grids, just-in-time delivery, satnav, globally dispersed manufacturing, and so forth. Can we be sure that these networks are resilient enough to rule out catastrophic disruptions cascading through the system – real-world analogues of the 2008 financial crash? London would be instantly paralysed without electricity. Supermarket shelves would soon be bare if supply chains were disrupted. Air travel can spread a pandemic worldwide in days, causing havoc in the megacities of the developing world. And social media can amplify panic and rumour, literally at the speed of light.

The worry isn’t just accidental breakdowns. Malicious events can have catastrophic consequences. Cyber-sabotage efforts, such as “Stuxnet”, and frequent hacking of financial institutions have highlighted these concerns. Small groups – and even individuals – are more empowered than ever before.

And there are downsides to the huge advances in biotech, despite the bright prospects these offer for medicine and agriculture. There were reports last month that Chinese researchers had been gene-editing human embryos using a new technique called CRISPR, raising controversial ethical issues about “designer babies”. But more disquieting are the experiments at the University of Wisconsin and in the Netherlands that show it’s surprisingly easy to make an influenza virus more virulent and transmissible. Last October, the US federal government decided to cease funding these so-called “gain of function” experiments.

We held a debate on this recently in Cambridge. Those supporting “gain of function” research highlighted the need to study viruses in order to stay one step ahead of natural mutations. Others viewed the techniques as a scary portent of what’s to come. What would happen, for instance, if an ebola virus were modified to be transmissible through the air? And they worried that the risk of failure to contain the pathogens within the lab is too high to justify the knowledge gained.

There is a contrast here with the (also real) dangers of nuclear technology. Nuclear installations are sufficiently large-scale for bodies such as the International Atomic Energy Agency to regulate them effectively. It is hard to make a clandestine H-bomb. In contrast, millions with the capability to misuse biotech will have access to biomedical labs, just as millions can misuse cybertech today.

Indeed, biohacking is burgeoning even as a hobby and competitive game. (For instance, there is competition to develop plants that glow in the dark, and eventually make trees that could replace street lights.) The physicist Freeman Dyson foresees a time when children will be able to design and create new organisms just as routinely as his generation played with chemistry sets. I’d guess that this is comfortably beyond the “SF fringe”, but were even part of this scenario to come about, our ecology (and even our species) surely would not long survive unscathed.

Not all those with “bio” expertise will be balanced and rational. My worst nightmare is an “eco-fanatic”, empowered by the biohacking expertise that may be routine by 2050, who thinks that “Gaia” can only be saved if the human population is reduced. The global village will have its village idiots, and they will have global range.

In the early days of “molecular biology”, a group of academic scientists formulated the “Asilomar Declaration”, advocating a moratorium on certain types of experiments and setting up guidelines. Such self-policing worked back in the Seventies. But the field is now far more global, more competitive, and commercial pressures are stronger. So it’s doubtful that regulations imposed on prudential or ethical grounds could be enforced worldwide, any more than the drug laws can. So this is a real anxiety – number one in my estimation – and will raise the tension between privacy, freedom and security.

What about other future technologies — computers and robotics, for instance? There is nothing new about machines that can surpass our mental abilities in special areas. Even the pocket calculators of the Seventies could do arithmetic better than us. In the Nineties, IBM’s “Deep Blue” chess-playing computer beat Garry Kasparov, then the world champion. More recently, another IBM computer won a television game show that required wide general knowledge and the ability to respond to questions in the style of crossword clues.

We’re witnessing a momentous speed-up in artificial intelligence (AI) – in the power of machines to learn, communicate and interact with us. Computers don’t learn like we do: they use “brute force” methods. They learn to translate from foreign languages by reading multilingual versions of, for example, millions of pages of EU documents (they never get bored). They learn to recognise dogs, cats and human faces by crunching through millions of images — not the way a baby learns.

Deep Mind, a London company that Google recently bought for £400 million, created a machine that can figure out the rules of all the old Atari games without being told, and then play them better than humans.

It’s still hard for AI to interact with the everyday world. Robots remain clumsy – they can’t tie your shoelaces or cut your toenails. But sensor technology, speech recognition, information searches and so forth are advancing apace.

Google’s driverless car has already covered hundreds of thousands of miles. But can it cope with emergencies? For instance, if an obstruction suddenly appears on a busy road, can the robotic “driver” discriminate whether it’s a paper bag, a dog or a child? The likely answer is that it won’t cope as well as a really good driver, but will be better than the average driver — machine errors may occur but not as often as human error. The roads will be safer. But when accidents occur they will create a legal minefield. Who should be held responsible — the “driver”, the owner, or the designer?

And what about the military use of autonomous drones? Can they be trusted to seek out a targeted individual and decide whether to deploy their weapon? Who has the moral responsibility then?

AI will take over a wider range of jobs – not just manual work but accountancy, routine legal work, medical diagnostics and surgery. And the big question is then: will AI be like earlier disruptive technologies – the car, for instance – which created as many jobs as they destroyed? Or is it really different this time?

During this century, our society will be increasingly transformed by computers. But will they remain idiots savants or will they display near-human all-round capabilities? If robots could observe and interpret their environment as adeptly as we do, they would be perceived as intelligent beings that we could relate to. Would we then have a responsibility to them? Should we care if they are frustrated or bored? Maybe we’d have no more reason to disparage them as zombies than to regard other people in that way.

Experts disagree on how long it will take before machines achieve general-purpose human level intelligence. Some say 25 years. Others say “never”. The median guess in a recent survey was about 50 years.

Some of those with the strongest credentials think that the AI field is advancing so fast that it already needs guidelines for “responsible innovation”, just as biotech does.

And there is disagreement about the route towards human-level intelligence. Some think we should emulate nature and reverse-engineer the human brain. Others say that’s a misguided approach – like designing a flying machine by copying how birds flap their wings. But it’s clear that once a threshold is crossed, there will be an intelligence explosion. That’s because electronics is a million times faster than the transmission of signals in the brain; and because computers can network and exchange information much faster than we can by speaking.

In the Sixties, the British mathematician I J Good, who worked at Bletchley Park with Alan Turing, pointed out that a super-intelligent robot (were it sufficiently versatile) could be the last invention that humans need ever make. Once machines have surpassed human capabilities, they could themselves design and assemble a new generation of even more powerful machines — triggering a real “intelligence explosion”. Or could humans transcend biology by merging with computers, maybe losing their individuality and evolving into a common consciousness? In old-style spiritualist parlance, they would “go over to the other side”.

The most prominent evangelist for runaway super-intelligence – the so-called “singularity” – is Ray Kurzweil, now working at Google. He thinks this could happen within 25 years. But he is worried that he may not live that long. So he takes dozens of pills each day, and if he dies he wants his body frozen until this nirvana is reached.

I was once interviewed by a group of “cryonic” enthusiasts in California called the “society for the abolition of involuntary death”. They will freeze your body, so that when immortality is on offer you can be resurrected. I said I’d rather end my days in an English churchyard than a Californian refrigerator. They derided me as a “deathist”. (I was surprised later to find that three Oxford academics were cryonic enthusiasts. Two have paid full whack; a third has taken the cut-price option of just wanting his head frozen.)

Let me briefly deploy an astronomical perspective and speculate about the really far future – the post-human era. There are chemical and metabolic limits to the size and processing power of organic brains. Maybe humans are close to these limits already. But there are no such constraints on silicon-based computers (still less, perhaps, quantum computers): for these, the potential for further development could be as dramatic as the evolution from monocellular organisms to humans. So, by any definition of “thinking”, the amount and intensity that’s done by organic human-type brains will, in the far future, be utterly swamped by the cerebrations of AI. Moreover, the Earth’s biosphere in which organic life has symbiotically evolved is not a constraint for advanced AI. Indeed, it is far from optimal – interplanetary and interstellar space will be the preferred arena where robotic fabricators will have the grandest scope for construction, and where non-biological “brains” may develop insights as far beyond our imaginings as string theory is for a mouse.

Abstract thinking by biological brains has underpinned the emergence of all culture and science. But this activity – spanning tens of millennia at most – will be a brief precursor to the more powerful intellects of the inorganic post-human era. So, in the far future, it won’t be the minds of humans, but those of machines, that will most fully understand the cosmos – and it will be the actions of autonomous machines that will most drastically change our world, and perhaps what lies beyond.

Martin Rees is the Astronomer Royal. He will be speaking at the Hay Festival on Sunday May 24

Fritz

CRISPR-CAS9: Build'em before they come

« Reply #64 on: 2015-08-10 11:13:33 »

CRISPR-Cas9: if this is not part of your vocabulary, and you are not awed and terrified all at the same time, read on; this is literally a life changer. Between designer children, unlimited food, and bioweapons that will wipe out everyone who doesn't have blonde hair and blue eyes, it is a Brave New World.

Go to the site: it is a really well-done transition to web-friendly magazine reading, and a good implementation of a 'wordpressish' website.

Cheers

Fritz

Easy DNA Editing Will Remake the World. Buckle Up.

Source: WIRED

Author: Amy Maxmen

Date: 2015.07.24

Jennifer Doudna did early work on Crispr. Photo by: Bryan Derballa

Spiny grass and scraggly pines creep amid the arts-and-crafts buildings of the Asilomar Conference Grounds, 100 acres of dune where California's Monterey Peninsula hammerheads into the Pacific. It's a rugged landscape, designed to inspire people to contemplate their evolving place on Earth. So it was natural that 140 scientists gathered here in 1975 for an unprecedented conference.

They were worried about what people called “recombinant DNA,” the manipulation of the source code of life. It had been just 22 years since James Watson, Francis Crick, and Rosalind Franklin described what DNA was—deoxyribonucleic acid, four different structures called bases stuck to a backbone of sugar and phosphate, in sequences thousands of bases long. DNA is what genes are made of, and genes are the basis of heredity.

Preeminent genetic researchers like David Baltimore, then at MIT, went to Asilomar to grapple with the implications of being able to decrypt and reorder genes. It was a God-like power—to plug genes from one living thing into another. Used wisely, it had the potential to save millions of lives. But the scientists also knew their creations might slip out of their control. They wanted to consider what ought to be off-limits.

By 1975, other fields of science—like physics—were subject to broad restrictions. Hardly anyone was allowed to work on atomic bombs, say. But biology was different. Biologists still let the winding road of research guide their steps. On occasion, regulatory bodies had acted retrospectively—after Nuremberg, Tuskegee, and the human radiation experiments, external enforcement entities had told biologists they weren't allowed to do that bad thing again. Asilomar, though, was about establishing prospective guidelines, a remarkably open and forward-thinking move.

At the end of the meeting, Baltimore and four other molecular biologists stayed up all night writing a consensus statement. They laid out ways to isolate potentially dangerous experiments and determined that cloning or otherwise messing with dangerous pathogens should be off-limits. A few attendees fretted about the idea of modifications of the human “germ line”—changes that would be passed on from one generation to the next—but most thought that was so far off as to be unrealistic. Engineering microbes was hard enough. The rules the Asilomar scientists hoped biology would follow didn't look much further ahead than ideas and proposals already on their desks.

Earlier this year, Baltimore joined 17 other researchers for another California conference, this one at the Carneros Inn in Napa Valley. “It was a feeling of déjà vu,” Baltimore says. There he was again, gathered with some of the smartest scientists on earth to talk about the implications of genome engineering.

The stakes, however, have changed. Everyone at the Napa meeting had access to a gene-editing technique called Crispr-Cas9. The first term is an acronym for “clustered regularly interspaced short palindromic repeats,” a description of the genetic basis of the method; Cas9 is the name of a protein that makes it work. Technical details aside, Crispr-Cas9 makes it easy, cheap, and fast to move genes around—any genes, in any living thing, from bacteria to people. “These are monumental moments in the history of biomedical research,” Baltimore says. “They don't happen every day.”

Using the three-year-old technique, researchers have already reversed mutations that cause blindness, stopped cancer cells from multiplying, and made cells impervious to the virus that causes AIDS. Agronomists have rendered wheat invulnerable to killer fungi like powdery mildew, hinting at engineered staple crops that can feed a population of 9 billion on an ever-warmer planet. Bioengineers have used Crispr to alter the DNA of yeast so that it consumes plant matter and excretes ethanol, promising an end to reliance on petrochemicals. Startups devoted to Crispr have launched. International pharmaceutical and agricultural companies have spun up Crispr R&D. Two of the most powerful universities in the US are engaged in a vicious war over the basic patent. Depending on what kind of person you are, Crispr makes you see a gleaming world of the future, a Nobel medallion, or dollar signs.

The technique is revolutionary, and like all revolutions, it's perilous. Crispr goes well beyond anything the Asilomar conference discussed. It could at last allow genetics researchers to conjure everything anyone has ever worried they would—designer babies, invasive mutants, species-specific bioweapons, and a dozen other apocalyptic sci-fi tropes. It brings with it all-new rules for the practice of research in the life sciences. But no one knows what the rules are—or who will be the first to break them.

In a way, humans were genetic engineers long before anyone knew what a gene was. They could give living things new traits—sweeter kernels of corn, flatter bulldog faces—through selective breeding. But it took time, and it didn't always pan out. By the 1930s refining nature got faster. Scientists bombarded seeds and insect eggs with x-rays, causing mutations to scatter through genomes like shrapnel. If one of hundreds of irradiated plants or insects grew up with the traits scientists desired, they bred it and tossed the rest. That's where red grapefruits came from, and most barley for modern beer.

Genome modification has become less of a crapshoot. In 2002, molecular biologists learned to delete or replace specific genes using enzymes called zinc-finger nucleases; the next-generation technique used enzymes named TALENs.

Yet the procedures were expensive and complicated. They only worked on organisms whose molecular innards had been thoroughly dissected—like mice or fruit flies. Genome engineers went on the hunt for something better.

As it happened, the people who found it weren't genome engineers at all. They were basic researchers, trying to unravel the origin of life by sequencing the genomes of ancient bacteria and microbes called Archaea (as in archaic), descendants of the first life on Earth. Deep amid the bases, the As, Ts, Gs, and Cs that made up those DNA sequences, microbiologists noticed recurring segments that were the same back to front and front to back—palindromes. The researchers didn't know what these segments did, but they knew they were weird. In a branding exercise only scientists could love, they named these clusters of repeating palindromes Crispr.
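A note on "palindromes": in molecular biology the word usually means a stretch of DNA that equals its own reverse complement, so that it reads the same on both strands, rather than a plain left-to-right word palindrome. A minimal Python sketch of that check follows; the function names are made up for illustration, and real Crispr repeats are often only partially palindromic.

```python
# Watson-Crick base pairing: A pairs with T, G pairs with C.
COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}

def reverse_complement(seq):
    """Return the sequence of the opposite strand, read in its own 5'->3' direction."""
    return "".join(COMPLEMENT[base] for base in reversed(seq.upper()))

def is_palindromic(seq):
    """True if the sequence equals its reverse complement (a DNA 'palindrome')."""
    return seq.upper() == reverse_complement(seq)

print(is_palindromic("GAATTC"))   # the well-known EcoRI recognition site
print(is_palindromic("GATTACA"))  # an arbitrary non-palindromic string
```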

Then, in 2005, a microbiologist named Rodolphe Barrangou, working at a Danish food company called Danisco, spotted some of those same palindromic repeats in Streptococcus thermophilus, the bacteria that the company uses to make yogurt and cheese. Barrangou and his colleagues discovered that the unidentified stretches of DNA between Crispr's palindromes matched sequences from viruses that had infected their S. thermophilus colonies. Like most living things, bacteria get attacked by viruses—in this case they're called bacteriophages, or phages for short. Barrangou's team went on to show that the segments served an important role in the bacteria's defense against the phages, a sort of immunological memory. If a phage infected a microbe whose Crispr carried its fingerprint, the bacteria could recognize the phage and fight back. Barrangou and his colleagues realized they could save their company some money by selecting S. thermophilus species with Crispr sequences that resisted common dairy viruses.
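The "immunological memory" idea above can be pictured as a lookup: the stretches between repeats (spacers) are stored fingerprints, and an invader is recognised if it contains one of them. Here is a toy Python sketch under heavy simplifying assumptions: all sequences are invented, matching is an exact substring test on one strand, and none of the real machinery is modelled.

```python
def extract_spacers(crispr_array, repeat):
    """Split a Crispr-like array on its repeat and return the spacers in between."""
    return [piece for piece in crispr_array.split(repeat) if piece]

def recognizes(crispr_array, repeat, phage_genome):
    """True if any stored spacer occurs verbatim in the phage genome."""
    return any(spacer in phage_genome
               for spacer in extract_spacers(crispr_array, repeat))

# Hypothetical toy data, not real sequences.
REPEAT = "GTTTTAGAGC"
SPACER_1 = "ACGTACGTAA"
SPACER_2 = "TTGACCGGTA"
array = REPEAT + SPACER_1 + REPEAT + SPACER_2 + REPEAT

seen_before = "CCC" + SPACER_1 + "GGG"   # phage carrying a remembered fingerprint
never_seen = "AAAAAAAAAACCCCCCCC"        # phage with no matching spacer

print(recognizes(array, REPEAT, seen_before))
print(recognizes(array, REPEAT, never_seen))
```

Selecting strains whose spacers cover the common dairy phages, as the Danisco team did, amounts to picking the arrays for which this check comes back true against the viruses that matter.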

As more researchers sequenced more bacteria, they found Crisprs again and again—half of all bacteria had them. Most Archaea did too. And even stranger, some of Crispr's sequences didn't encode the eventual manufacture of a protein, as is typical of a gene, but instead led to RNA—single-stranded genetic material. (DNA, of course, is double-stranded.)

That pointed to a new hypothesis. Most present-day animals and plants defend themselves against viruses with structures made out of RNA. So a few researchers started to wonder if Crispr was a primordial immune system. Among the people working on that idea was Jill Banfield, a geomicrobiologist at UC Berkeley, who had found Crispr sequences in microbes she collected from acidic, 110-degree water from the defunct Iron Mountain Mine in Shasta County, California. But to figure out if she was right, she needed help.

Luckily, one of the country's best-known RNA experts, a biochemist named Jennifer Doudna, worked on the other side of campus in an office with a view of the Bay and San Francisco's skyline. It certainly wasn't what Doudna had imagined for herself as a girl growing up on the Big Island of Hawaii. She simply liked math and chemistry—an affinity that took her to Harvard and then to a postdoc at the University of Colorado. That's where she made her initial important discoveries, revealing the three-dimensional structure of complex RNA molecules that could, like enzymes, catalyze chemical reactions.

The mine bacteria piqued Doudna's curiosity, but when Doudna pried Crispr apart, she didn't see anything to suggest the bacterial immune system was related to the one plants and animals use. Still, she thought the system might be adapted for diagnostic tests.

Banfield wasn't the only person to ask Doudna for help with a Crispr project. In 2011, Doudna was at an American Society for Microbiology meeting in San Juan, Puerto Rico, when an intense, dark-haired French scientist asked her if she wouldn't mind stepping outside the conference hall for a chat. This was Emmanuelle Charpentier, a microbiologist at Umeå University in Sweden.

As they wandered through the alleyways of old San Juan, Charpentier explained that one of Crispr's associated proteins, named Csn1, appeared to be extraordinary. It seemed to search for specific DNA sequences in viruses and cut them apart like a microscopic multitool. Charpentier asked Doudna to help her figure out how it worked. “Somehow the way she said it, I literally—I can almost feel it now—I had this chill down my back,” Doudna says. “When she said ‘the mysterious Csn1’ I just had this feeling, there is going to be something good here.”

Back in Sweden, Charpentier kept a colony of Streptococcus pyogenes in a biohazard chamber. Few people want S. pyogenes anywhere near them. It can cause strep throat and necrotizing fasciitis—flesh-eating disease. But it was the bug Charpentier worked with, and it was in S. pyogenes that she had found that mysterious yet mighty protein, now renamed Cas9. Charpentier swabbed her colony, purified its DNA, and FedExed a sample to Doudna.

Working together, Charpentier’s and Doudna’s teams found that Crispr made two short strands of RNA and that Cas9 latched onto them. The sequence of the RNA strands corresponded to stretches of viral DNA and could home in on those segments like a genetic GPS. And when the Crispr-Cas9 complex arrives at its destination, Cas9 does something almost magical: It changes shape, grasping the DNA and slicing it with a precise molecular scalpel.

Jennifer Doudna did early work on Crispr. Photo by: Bryan Derballa

Here’s what’s important: Once they’d taken that mechanism apart, Doudna’s postdoc, Martin Jinek, combined the two strands of RNA into one fragment—“guide RNA”—that Jinek could program. He could make guide RNA with whatever genetic letters he wanted; not just from viruses but from, as far as they could tell, anything. In test tubes, the combination of Jinek’s guide RNA and the Cas9 protein proved to be a programmable machine for DNA cutting. Compared to TALENs and zinc-finger nucleases, this was like trading in rusty scissors for a computer-controlled laser cutter. “I remember running into a few of my colleagues at Berkeley and saying we have this fantastic result, and I think it’s going to be really exciting for genome engineering. But I don’t think they quite got it,” Doudna says. “They kind of humored me, saying, ‘Oh, yeah, that’s nice.’”
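The "programmable" part of that description can be illustrated with a deliberately simplified sketch. It treats the guide as a plain string, finds exact matches in a DNA string, and cuts right after each match. The function name `cut_at_guide`, the example sequences, the exact-match rule, and the cut position are all illustrative assumptions, not a model of real Cas9 biochemistry.

```python
from typing import List


def cut_at_guide(dna: str, guide: str) -> List[str]:
    """Split `dna` immediately after every exact, non-overlapping match of `guide`.

    Toy model: the guide is just a search string, and the cut position
    (right after the match) is an assumption made for illustration.
    """
    if not guide:
        raise ValueError("guide must be non-empty")
    fragments = []
    start = 0
    pos = dna.find(guide)
    while pos != -1:
        cut = pos + len(guide)            # hypothetical cut site
        fragments.append(dna[start:cut])
        start = cut
        pos = dna.find(guide, cut)        # keep searching after the cut
    fragments.append(dna[start:])         # whatever is left after the last cut
    return fragments


if __name__ == "__main__":
    genome = "TTGACCGATTACAGGCTAAGC"      # made-up sequence
    print(cut_at_guide(genome, "GATTACA"))  # ['TTGACCGATTACA', 'GGCTAAGC']
```

Changing only the `guide` argument moves the cut to a different place, which is the sense in which the same machinery is reprogrammed rather than rebuilt for each target.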

On June 28, 2012, Doudna’s team published its results in Science. In the paper and in an earlier corresponding patent application, they suggest their technology could be a tool for genome engineering. It was elegant and cheap. A grad student could do it.

The finding got noticed. In the 10 years preceding 2012, 200 papers mentioned Crispr. By 2014 that number had more than tripled. Doudna and Charpentier were each recently awarded the $3 million 2015 Breakthrough Prize. Time magazine listed the duo among the 100 most influential people in the world. Nobody was just humoring Doudna anymore.

Most Wednesday afternoons, Feng Zhang, a molecular biologist at the Broad Institute of MIT and Harvard, scans the contents of Science as soon as they are posted online. In 2012, he was working with Crispr-Cas9 too. So when he saw Doudna and Charpentier's paper, did he think he'd been scooped? Not at all. “I didn't feel anything,” Zhang says. “Our goal was to do genome editing, and this paper didn't do it.” Doudna's team had cut DNA floating in a test tube, but to Zhang, if you weren't working with human cells, you were just screwing around.

That kind of seriousness is typical for Zhang. At 11, he moved from China to Des Moines, Iowa, with his parents, who are engineers—one computer, one electrical. When he was 16, he got an internship at the gene therapy research institute at Iowa Methodist hospital. By the time he graduated high school he'd won multiple science awards, including third place in the Intel Science Talent Search.

When Doudna talks about her career, she dwells on her mentors; Zhang lists his personal accomplishments, starting with those high school prizes. Doudna seems intuitive and has a hands-off management style. Zhang … pushes. We scheduled a video chat at 9:15 pm, and he warned me that we'd be talking data for a couple of hours. “Power-nap first,” he said.

Zhang got his job at the Broad in 2011, when he was 29. Soon after starting there, he heard a speaker at a scientific advisory board meeting mention Crispr. “I was bored,” Zhang says, “so as the researcher spoke, I just Googled it.” Then he went to Miami for an epigenetics conference, but he hardly left his hotel room. Instead Zhang spent his time reading papers on Crispr and filling his notebook with sketches on ways to get Crispr and Cas9 into the human genome. “That was an extremely exciting weekend,” he says, smiling.

Just before Doudna's team published its discovery in Science, Zhang applied for a federal grant to study Crispr-Cas9 as a tool for genome editing. Doudna's publication shifted him into hyperspeed. He knew it would prompt others to test Crispr on genomes. And Zhang wanted to be first.

Even Doudna, for all of her equanimity, had rushed to report her finding, though she hadn't shown the system working in human cells. “Frankly, when you have a result that is exciting,” she says, “one does not wait to publish it.”

In January 2013, Zhang's team published a paper in Science showing how Crispr-Cas9 edits genes in human and mouse cells. In the same issue, Harvard geneticist George Church edited human cells with Crispr too. Doudna's team reported success in human cells that month as well, though Zhang is quick to assert that his approach cuts and repairs DNA better.

That detail matters because Zhang had asked the Broad Institute and MIT, where he holds a joint appointment, to file for a patent on his behalf. Doudna had filed her patent application—which was public information—seven months earlier. But the attorney filing for Zhang checked a box on the application marked “accelerate” and paid a fee, usually somewhere between $2,000 and $4,000. A series of emails followed between agents at the US Patent and Trademark Office and the Broad's patent attorneys, who argued that their claim was distinct.

A little more than a year after those human-cell papers came out, Doudna was on her way to work when she got an email telling her that Zhang, the Broad Institute, and MIT had indeed been awarded the patent on Crispr-Cas9 as a method to edit genomes. “I was quite surprised,” she says, “because we had filed our paperwork several months before he had.”

The Broad win started a firefight. The University of California amended Doudna's original claim to overlap Zhang's and sent the patent office a 114-page application for an interference proceeding—a hearing to determine who owns Crispr—this past April. In Europe, several parties are contesting Zhang's patent on the grounds that it lacks novelty. Zhang points to his grant application as proof that he independently came across the idea. He says he could have done what Doudna's team did in 2012, but he wanted to prove that Crispr worked within human cells. The USPTO may make its decision as soon as the end of the year.

The stakes here are high. Any company that wants to work with anything other than microbes will have to license Zhang's patent; royalties could be worth billions of dollars, and the resulting products could be worth billions more. Just by way of example: In 1983 Columbia University scientists patented a method for introducing foreign DNA into cells, called cotransformation. By the time the patents expired in 2000, they had brought in $790 million in revenue.

It's a testament to Crispr's value that despite the uncertainty over ownership, companies based on the technique keep launching. In 2011 Doudna and a student founded a company, Caribou, based on earlier Crispr patents; the University of California offered Caribou an exclusive license on the patent Doudna expected to get. Caribou uses Crispr to create industrial and research materials, potentially enzymes in laundry detergent and laboratory reagents. To focus on disease—where the long-term financial gain of Crispr-Cas9 will undoubtedly lie—Caribou spun off another biotech company called Intellia Therapeutics and sublicensed the Crispr-Cas9 rights. Pharma giant Novartis has invested in both startups. In Switzerland, Charpentier cofounded Crispr Therapeutics. And in Cambridge, Massachusetts, Zhang, George Church, and several others founded Editas Medicine, based on licenses on the patent Zhang eventually received.

Thus far the four companies have raised at least $158 million in venture capital.

Any gene typically has just a 50–50 chance of getting passed on. Either the offspring gets a copy from Mom or a copy from Dad. But in 1957 biologists found exceptions to that rule, genes that literally manipulated cell division and forced themselves into a larger number of offspring than chance alone would have allowed.

A decade ago, an evolutionary geneticist named Austin Burt proposed a sneaky way to use these “selfish genes.” He suggested tethering one to a separate gene—one that you wanted to propagate through an entire population. If it worked, you'd be able to drive the gene into every individual in a given area. Your gene of interest graduates from public transit to a limousine in a motorcade, speeding through a population in flagrant disregard of heredity's traffic laws. Burt suggested using this “gene drive” to alter mosquitoes that spread malaria, which kills around a million people every year. It's a good idea. In fact, other researchers are already using other methods to modify mosquitoes to resist the Plasmodium parasite that causes malaria and to be less fertile, reducing their numbers in the wild. But engineered mosquitoes are expensive. If researchers don't keep topping up the mutants, the normals soon recapture control of the ecosystem.

Push those modifications through with a gene drive and the normal mosquitoes wouldn't stand a chance. The problem is, inserting the gene drive into the mosquitoes was impossible. Until Crispr-Cas9 came along.
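The difference between 50-50 inheritance and a gene drive can be made concrete with a small deterministic sketch. It assumes random mating, an effectively infinite population, and no fitness cost, none of which the article specifies. The `conversion` parameter is a hypothetical knob: 0 means ordinary Mendelian inheritance, 1 means every carrier with one copy passes the drive on to all offspring.

```python
def next_frequency(p: float, conversion: float) -> float:
    """Frequency of the drive allele in the next generation.

    Carriers with one copy transmit the allele with probability
    (1 + conversion) / 2, so conversion = 0 is plain 50-50 inheritance.
    Assumes random mating and no fitness cost (illustrative only).
    """
    two_copies = p * p
    one_copy = 2 * p * (1 - p)
    return two_copies + one_copy * (1 + conversion) / 2


def simulate(p0: float, conversion: float, generations: int) -> list:
    """Return the allele frequency for generation 0..generations."""
    freqs = [p0]
    for _ in range(generations):
        freqs.append(next_frequency(freqs[-1], conversion))
    return freqs


if __name__ == "__main__":
    for label, c in [("Mendelian", 0.0), ("gene drive", 1.0)]:
        final = simulate(0.01, c, 10)[-1]
        print(f"{label}: starts at 1%, frequency after 10 generations = {final:.4f}")
```

Under these assumptions a Mendelian allele released at 1% stays at 1%, which matches the article's point that engineered mosquitoes have to be topped up, while a perfect drive passes 99.9% within ten generations.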

Emmanuelle Charpentier did early work on Crispr. Photo by: Baerbel Schmidt

Today, behind a set of four locked and sealed doors in a lab at the Harvard School of Public Health, a special set of mosquito larvae of the African species Anopheles gambiae wriggle near the surface of shallow tubs of water. These aren't normal Anopheles, though. The lab is working on using Crispr to insert malaria-resistant gene drives into their genomes. It hasn't worked yet, but if it does … well, consider this from the mosquitoes' point of view. This project isn't about reengineering one of them. It's about reengineering them all.

Kevin Esvelt, the evolutionary engineer who initiated the project, knows how serious this work is. The basic process could wipe out any species. Scientists will have to study the mosquitoes for years to make sure that the gene drives can't be passed on to other species of mosquitoes. And they want to know what happens to bats and other insect-eating predators if the drives make mosquitoes extinct. “I am responsible for opening a can of worms when it comes to gene drives,” Esvelt says, “and that is why I try to ensure that scientists are taking precautions and showing themselves to be worthy of the public's trust—maybe we're not, but I want to do my damnedest to try.”

Esvelt talked all this over with his adviser—Church, who also worked with Zhang. Together they decided to publish their gene-drive idea before it was actually successful. They wanted to lay out their precautionary measures, way beyond five nested doors. Gene drive research, they wrote, should take place in locations where the species of study isn't native, making it less likely that escapees would take root. And they also proposed a way to turn the gene drive off when an engineered individual mated with a wild counterpart—a genetic sunset clause. Esvelt filed for a patent on Crispr gene drives, partly, he says, to block companies that might not take the same precautions.

Within a year, and without seeing Esvelt's papers, biologists at UC San Diego had used Crispr to insert gene drives into fruit flies—they called them “mutagenic chain reactions.” They had done their research in a chamber behind five doors, but the other precautions weren't there. Church said the San Diego researchers had gone “a step too far”—big talk from a scientist who says he plans to use Crispr to bring back an extinct woolly mammoth by deriving genes from frozen corpses and injecting them into elephant embryos. (Church says tinkering with one woolly mammoth is way less scary than messing with whole populations of rapidly reproducing insects. “I'm afraid of everything,” he says. “I encourage people to be as creative in thinking about the unintended consequences of their work as the intended.”)

Ethan Bier, who worked on the San Diego fly study, agrees that gene drives come with risks. But he points out that Esvelt's mosquitoes don't have the genetic barrier Esvelt himself advocates. (To be fair, that would defeat the purpose of a gene drive.) And the ecological barrier, he says, is nonsense. “In Boston you have hot and humid summers, so sure, tropical mosquitoes may not be native, but they can certainly survive,” Bier says. “If a pregnant female got out, she and her progeny could reproduce in a puddle, fly to ships in the Boston Harbor, and get on a boat to Brazil.”

These problems don't end with mosquitoes. One of Crispr's strengths is that it works on every living thing. That kind of power makes Doudna feel like she opened Pandora's box. Use Crispr to treat, say, Huntington's disease—a debilitating neurological disorder—in the womb, when an embryo is just a ball of cells? Perhaps. But the same method could also possibly alter less medically relevant genes, like the ones that make skin wrinkle. “We haven't had the time, as a community, to discuss the ethics and safety,” Doudna says, “and, frankly, whether there is any real clinical benefit of this versus other ways of dealing with genetic disease.”

That's why she convened the meeting in Napa. All the same problems of recombinant DNA that the Asilomar attendees tried to grapple with are still there—more pressing now than ever. And if the scientists don't figure out how to handle them, some other regulatory body might. Few researchers, Baltimore included, want to see Congress making laws about science. “Legislation is unforgiving,” he says. “Once you pass it, it is very hard to undo.”

In other words, if biologists don't start thinking about ethics, the taxpayers who fund their research might do the thinking for them.

All of that only matters if every scientist is on board. A month after the Napa conference, researchers at Sun Yat-sen University in Guangzhou, China, announced they had used Crispr to edit human embryos. Specifically they were looking to correct mutations in the gene that causes beta thalassemia, a disorder that interferes with a person's ability to make healthy red blood cells.

The work wasn't successful—Crispr, it turns out, didn't target genes as well in embryos as it does in isolated cells. The Chinese researchers tried to skirt the ethical implications of their work by using nonviable embryos, which is to say they could never have been brought to term. But the work attracted attention. A month later, the US National Academy of Sciences announced that it would create a set of recommendations for scientists, policymakers, and regulatory agencies on when, if ever, embryonic engineering might be permissible. Another National Academy report will focus on gene drives. Though those recommendations don't carry the weight of law, federal funding in part determines what science gets done, and agencies that fund research around the world often abide by the academy's guidelines.

The truth is, most of what scientists want to do with Crispr is not controversial. For example, researchers once had no way to figure out why spiders have the same gene that determines the pattern of veins in the wings of flies. You could sequence the spider and see that the “wing gene” was in its genome, but all you’d know was that it certainly wasn’t designing wings. Now, with less than $100, an ordinary arachnologist can snip the wing gene out of a spider embryo and see what happens when that spider matures. If it’s obvious—maybe its claws fail to form—you’ve learned that the wing gene must have served a different purpose before insects branched off, evolutionarily, from the ancestor they shared with spiders. Pick your creature, pick your gene, and you can bet someone somewhere is giving it a go.

Academic and pharmaceutical company labs have begun to develop Crispr-based research tools, such as cancerous mice—perfect for testing new chemotherapies. A team at MIT, working with Zhang, used Crispr-Cas9 to create, in just weeks, mice that inevitably get liver cancer. That kind of thing used to take more than a year. Other groups are working on ways to test drugs on cells with single-gene variations to understand why the drugs work in some cases and fail in others. Zhang’s lab used the technique to learn which genetic variations make people resistant to a melanoma drug called Vemurafenib. The genes he identified may provide research targets for drug developers.

The real money is in human therapeutics. For example, labs are working on the genetics of so-called elite controllers, people who can be HIV-positive but never develop AIDS. Using Crispr, researchers can knock out a gene called CCR5, which makes a protein that helps usher HIV into cells. You’d essentially make someone an elite controller. Or you could use Crispr to target HIV directly; that begins to look a lot like a cure.

Feng Zhang was awarded the Crispr patent last year. Photo by: Matthew Monteith

Or—and this idea is decades away from execution—you could figure out which genes make humans susceptible to HIV overall. Make sure they don’t serve other, more vital purposes, and then “fix” them in an embryo. It’d grow into a person immune to the virus.

But straight-out editing of a human embryo sets off all sorts of alarms, both in terms of ethics and legality. It contravenes the policies of the US National Institutes of Health, and in spirit at least runs counter to the United Nations’ Universal Declaration on the Human Genome and Human Rights. (Of course, when the US government said it wouldn’t fund research on human embryonic stem cells, private entities raised millions of dollars to do it themselves.) Engineered humans are a ways off—but nobody thinks they’re science fiction anymore.

Even if scientists never try to design a baby, the worries those Asilomar attendees had four decades ago now seem even more prescient. The world has changed. “Genome editing started with just a few big labs putting in lots of effort, trying something 1,000 times for one or two successes,” says Hank Greely, a bioethicist at Stanford. “Now it’s something that someone with a BS and a couple thousand dollars’ worth of equipment can do. What was impractical is now almost everyday. That’s a big deal.”

In 1975 no one was asking whether a genetically modified vegetable should be welcome in the produce aisle. No one was able to test the genes of an unborn baby, or sequence them all. Today swarms of investors are racing to bring genetically engineered creations to market. The idea of Crispr slides almost frictionlessly into modern culture.

In an odd reversal, it’s the scientists who are showing more fear than the civilians. When I ask Church for his most nightmarish Crispr scenario, he mutters something about weapons and then stops short. He says he hopes to take the specifics of the idea, whatever it is, to his grave. But thousands of other scientists are working on Crispr. Not all of them will be as cautious. “You can’t stop science from progressing,” Jinek says. “Science is what it is.” He’s right. Science gives people power. And power is unpredictable.

Amy Maxmen (@amymaxmen) writes about science for National Geographic, Newsweek, and other publications. This is her first article for WIRED.

Where there is the necessary technical skill to move mountains, there is no need for the faith that moves mountains -anon-

Fritz

Re:Singularity sighted.

« Reply #65 on: 2015-08-19 11:21:38 »

Stumbled across this great explanation of this thread's title and idea.

Cheers

Fritz

The Singularity is Always Near

Source: Veneer Magazine

Author: Kevin Kelly

Date: 2015.03.24

Although the sense that we are experiencing a singularity-like event with computers and the world wide web is visceral, the current concept of a singularity is not the best explanation for this transformation in progress.

Singularity is a term borrowed from physics to describe a cataclysmic threshold in a black hole. In the canonical usage, it defines the moment an object being pulled into a black hole passes a point beyond which nothing about it, including information, can escape. In other words, although an object’s entry into a black hole is steady and knowable, once it passes this discrete point absolutely nothing about its future can be known. This disruption on the way to infinity is called a singular event — a singularity.

Mathematician and science fiction author Vernor Vinge applied this metaphor to the acceleration of technological change. The power of computers has been increasing at an exponential rate with no end in sight, which led Vinge to an alarming conclusion. In his analysis, at some point not too far away, innovations in computer power would enable us to design computers more intelligent than we are; these smarter computers could design computers yet smarter than themselves, and so on, the loop of computers-making-newer-computers accelerating very quickly towards unimaginable levels of intelligence. This progress in IQ and power, when graphed, generates a rising curve that appears to approach the straight-up vertical limit of infinity. In mathematical terms it resembles the singularity of a black hole, because, as Vinge announced, it will be impossible to know anything beyond this threshold. If we make an Artificial Intelligence (or AI), which in turn makes a greater AI, ad infinitum, then their futures are unknowable to us, just as our lives are unfathomable to a slug. So the singularity becomes, in a sense, a black hole, an impenetrable veil hiding our future from us.

Ray Kurzweil, a legendary inventor and computer scientist, seized on this metaphor and has applied it across a broad range of technological frontiers. He demonstrated that this kind of exponential acceleration isn’t unique to computer chips; it’s happening in most categories of innovation driven by information, in fields as diverse as genomics, telecommunications and commerce. The technium itself is accelerating its rate of change. Kurzweil found that if you make a very crude comparison between the processing power of neurons in human brains and the processing powers of transistors in computers, you could map out the point at which computer intelligence will exceed human intelligence, and thus predict when the crossover singularity would happen. Kurzweil calculates that the singularity will happen around 2040. Since that, relatively, seems like tomorrow, Kurzweil announced with great fanfare that the “Singularity is near.” In the meantime, everything is racing to a point that we cannot see or conceive beyond.

Though, by definition, we cannot know what will be on the other side of the singularity, Kurzweil and others believe that our human minds, at least, will become immortal because we’ll be able to download, migrate, or eternally repair them with our collective brainpower. Our minds (that is, ourselves) will continue on with or without our upgraded bodies. The singularity, then, becomes a portal or bridge to the future. All you have to do is live up to the singularity in 2040. If you make it, you’ll become immortal.

I’m not the first person to point out the many similarities between the singularity and the Rapture. The parallels are so close that some critics call the singularity “the spike” to hint at that decisive moment of fundamentalist Christian apocalypse. At the Rapture, when Jesus returns, all believers will suddenly be lifted out of their ordinary lives and ushered directly into heavenly immortality without experiencing death. This singular event will produce repaired bodies, intact minds full of eternal wisdom, and is scheduled to happen “in the near future.” This hope is almost identical to the techno-Rapture of the singularity. It is worth trying to unravel the many assumptions built into the Kurzweilian version of singularity, because despite its oft-misleading nature, some aspects of the technological singularity do capture the dynamic of technological change.

First, immortality is in no way ensured by a singularity of AI, for any number of reasons: Our “selves” may not be very portable, engineered eternal bodies may not be very appealing, nor may super-intelligence alone be enough to solve the problem of overcoming bodily death.

Second, intelligence may or may not be infinitely expandable from our present point. Since we can imagine a manufactured intelligence greater than ours, we think that we possess enough intelligence to pull off this trick of bootstrapping, but in order to reach a singularity of ever-increasing AI, we have to be smart enough not only to create a greater intelligence, but also to make one that is able to move to the next level. A chimp is hundreds of times smarter than an ant, but the greater intelligence of a chimp is not smart enough to make a mind smarter than itself. In other words, not all intelligences are capable of bootstrapping intelligence. We might call a mind capable of imagining another type of intelligence but incapable of replicating itself a “Type 1” mind. Following this classification, a “Type 2” mind would be an intelligence capable of replicating itself (making artificial minds) but incapable of making one substantially smarter. A “Type 3” mind, then, would be capable of creating advanced enough intelligence to make another generation even smarter. We assume our human minds are Type 3, but it remains an assumption. It is possible that our minds are Type 1, or that greater intelligence may have to evolve slowly, rather than be bootstrapped to an instant singularity.

Third, the notion of a mathematical singularity is illusionary, as any chart of exponential growth will show. Like many of Kurzweil’s examples, an exponential can be plotted linearly so that the chart shows the growth taking off like a rocket. It can also be plotted, however, on a log-log graph, which has the exponential growth built into the graph’s axis. In this case, the “takeoff” is a perfectly straight line. Kurzweil’s website has scores of graphs, all showing straight-line exponential growth headed towards a singularity. But any log-log graph of a function will show a singularity at Time 0 — now. If something is growing exponentially, the point at which it will appear to rise to infinity will always be “just about now.”

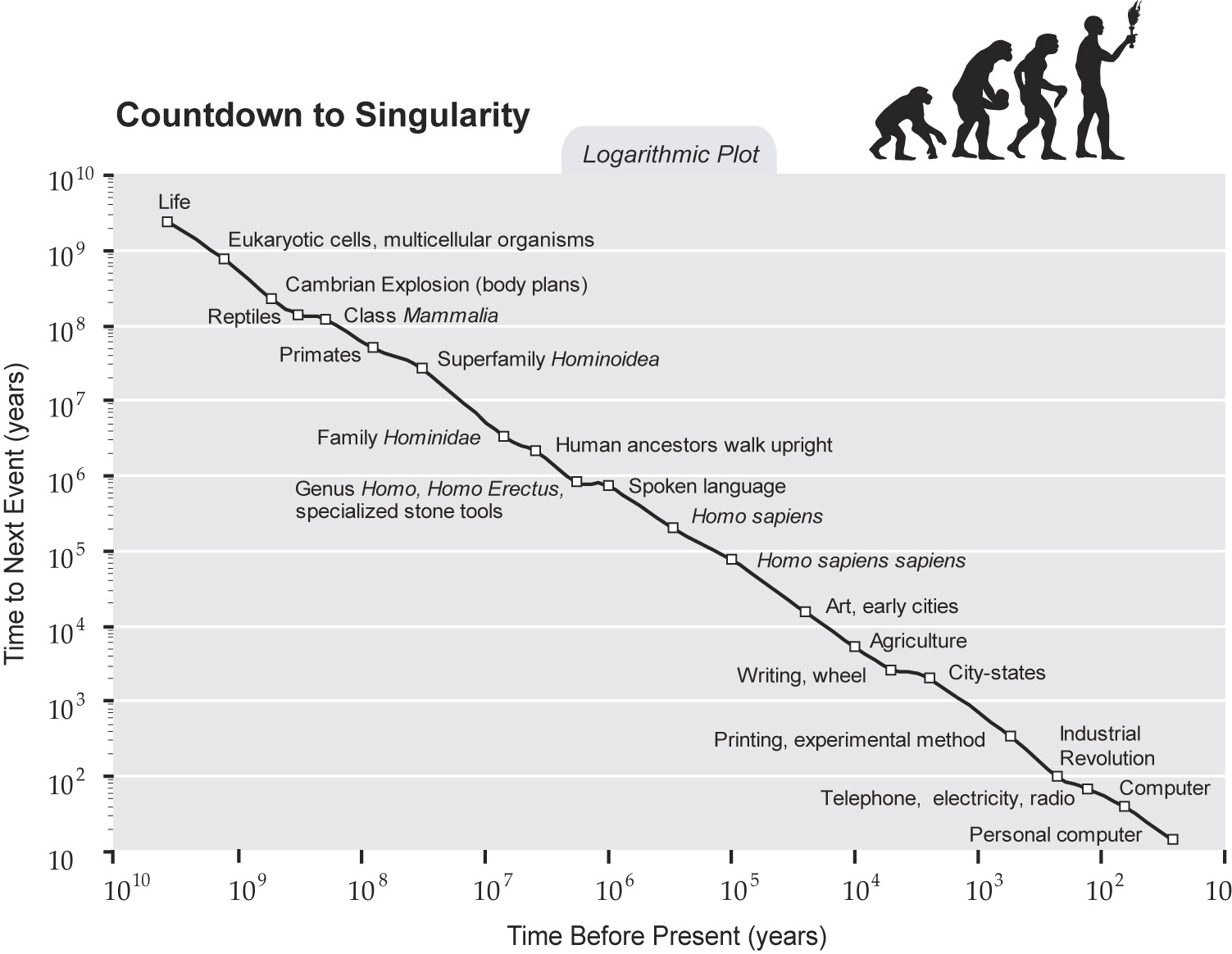

Look at Kurzweil’s chart of the exponential rate at which major events occur in the world, called “Countdown to Singularity” (Figure 1). It displays a beautiful laser-straight rush across millions of years of history.

If you continue the curve to the present day, however, rather than cutting it off 30 years ago, it shows something strange. Kevin Drum, a fan and critic of Kurzweil who writes for the Washington Monthly, extended this chart to the present (Figure 2).

Surprisingly, it suggests the singularity is now; more weirdly, it suggests that the view would have looked the same at almost any time along the curve. If Benjamin Franklin, an early Kurzweil type, had mapped out the same graph in the 1700s, his graph would have suggested that the singularity was happening right then, right now! The same would have happened at the invention of radio, or the appearance of cities, or at any point in history since — as the straight line indicates — the “curve,” or rate, is the same anywhere along the line.

Switching chart modes doesn’t help. If you define the singularity as the near-vertical asymptote you get when you plot an exponential progression on a linear chart, then you’ll get that infinite slope at any arbitrary end point along the exponential progression. That means that the singularity is “near” at any end point along the time line, as long as you are in exponential growth. The singularity is simply a phantom that materializes anytime you observe exponential acceleration retrospectively. These charts correctly demonstrate that exponential growth extends back to the beginning of the cosmos, meaning that for millions of years the singularity was just about to happen! In other words, the singularity is always “near,” has always been “near,” and will always be “near.”
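The claim that the view looks the same from any end point can be checked numerically. The sketch below assumes a pure exponential with a made-up growth rate of 5% per year and compares the 50 years leading up to two different "nows". The function name `normalized_window`, the rate, and the years are illustrative choices, not figures from Kurzweil or Kelly.

```python
import math


def normalized_window(rate, end_year, window=50, steps=5):
    """Sample exp(rate * year) over the `window` years before `end_year`,
    scaled so that the value at `end_year` itself is 1."""
    years = [end_year - window + window * i / steps for i in range(steps + 1)]
    peak = math.exp(rate * end_year)
    return [math.exp(rate * y) / peak for y in years]


if __name__ == "__main__":
    franklin = normalized_window(0.05, 1750)   # hypothetical observer in 1750
    today = normalized_window(0.05, 2015)      # hypothetical observer in 2015
    same_shape = all(
        math.isclose(a, b, rel_tol=1e-9) for a, b in zip(franklin, today)
    )
    print("Same curve shape from both vantage points:", same_shape)
```

Once each window is scaled to its own end point, the two curves coincide, so the apparent "takeoff" sits at the right-hand edge of the chart no matter which year is chosen as the edge.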

We could broaden the definition of intelligence to include evolution, which is, after all, a type of learning. In this case, we could say that intelligence has been bootstrapping itself with smarter stuff all along, making itself smarter, ad infinitum. There is no discontinuity in this conception, nor any discrete points to map.

Fourth, and most importantly, technological transitions represented by the singularity are completely imperceptible from within the transition inaccurately represented by a singularity. A phase shift from one level to the next is only visible from the perch of the new level — after arrival there. Compared to a neuron, the mind is a singularity. It is invisible and unimaginable to the lower, inferior parts. From the perspective of a neuron, the movement from a few neurons, to many neurons, to an alert mind, seems a slow, continuous journey of gathering neurons. There is no sense of disruption — of Rapture. This discontinuity can only be seen in retrospect.

Language is a singularity of sorts, as is writing. Nevertheless, the path to both of these was continuous and imperceptible to the acquirers. I am reminded of a great story that a friend tells of cavemen sitting around the campfire 100,000 years ago, chewing on the last bits of meat, chatting in guttural sounds. Their conversation goes something like this:

— “Hey, you guys, we are TALKING!”

— “What do you mean ‘TALKING?’ Are you finished with that bone?”

— “I mean, we are SPEAKING to each other! Using WORDS. Don’t you get it?”

— “You’ve been drinking that grape stuff again, haven’t you?”

— “See, we are doing it right now!”

— “Doing what?”

As the next level of organization kicks in, the current level is incapable of perceiving the new level, because that perception must take place retrospectively. The coming phase shift is real, but it will be imperceptible during the transition. Sure, things will speed up, but that will only hide the real change, which is a change in the structural rules of the game. We can expect in the next hundred years that life will appear to be ordinary and not discontinuous, certainly not cataclysmic. All the while, something new will be gathering, and we’ll slowly recognize that we’ve acquired the tools to perceive that new tools are present — and that we’ve had them for some time.

When I mentioned this to emerging digital technology specialist and digerati Esther Dyson, she reminded me that we have an experience close to the singularity every day: “It’s called waking up. Looking backwards, you can understand what happens, but in your dreams you are unaware that you could become awake.”

A thousand years from now, all the 11-dimensional charts of the time will show that “the singularity is near.” Immortal beings, global consciousness and everything else we hope for in the future may be real, but a linear-log curve in 3006 will show that a singularity approaches. The singularity is not a discrete event. It’s a continuum woven into the very warp of extropic systems. It is a traveling mirage that moves along with us, as life and the technium accelerate their evolution.

Ray Kurzweil writes in response:

Allow me to clarify the metaphor implied by the term “singularity.” The metaphor implicit in the term “singularity” as applied to future human history is not to a point of infinity, but rather to the event horizon surrounding a black hole. Densities are not infinite at the event horizon but merely large enough that it is difficult to see past them from outside.

I say “difficult” rather than impossible, because the Hawking radiation emitted from the event horizon is likely to be quantum entangled with events inside the black hole, so there may be ways of retrieving the information. This was the concession made recently by Stephen Hawking. However, without getting into the details of this controversy, it is fair to say that seeing past the event horizon is difficult — impossible from the perspective of classical physics — because the gravity of the black hole is strong enough to prevent classical information from inside the black hole from escaping.

We can, however, use our intelligence to infer what life is like inside the event horizon, even though seeing past the event horizon is effectively blocked. Similarly, we can use our intelligence to make meaningful statements about the world after the historical singularity, but seeing past this event horizon is difficult because of the profound transformation that it represents.

Therefore, discussions of infinity are irrelevant. You are correct in your statement that exponential growth is smooth and continuous. From a mathematical perspective, an exponential looks the same everywhere and this applies to the exponential growth of the power (as expressed in price-performance, capacity, bandwidth, etc.) of information technologies. However, despite being smooth and continuous, exponential growth is nonetheless explosive once the curve reaches transformative levels. Consider the Internet. When the ARPANET went from 10,000 nodes to 20,000 in one year, and then to 40,000 and then 80,000, it was of interest only to a few thousand scientists. When ten years later it went from 10 million nodes to 20 million, and then 40 million and 80 million, the appearance of this curve looked identical (especially when viewed on a log-log plot), but the consequences were profoundly more transformative. There is a point in the smooth exponential growth of these different aspects of information technology when they transform the world as we know it.
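The node counts quoted above make a quick arithmetic check possible. The sketch below uses only the figures from the paragraph; the helper names `log_steps` and `absolute_steps` are assumptions introduced for illustration. It compares the two doubling sequences on a log scale and in absolute terms.

```python
import math

# Node counts quoted in the paragraph above.
early_nodes = [10_000, 20_000, 40_000, 80_000]
later_nodes = [10_000_000, 20_000_000, 40_000_000, 80_000_000]

def log_steps(series):
    """Year-over-year change in log10(nodes): the step height on a log axis."""
    return [math.log10(b) - math.log10(a) for a, b in zip(series, series[1:])]

def absolute_steps(series):
    """Year-over-year change in raw node count."""
    return [b - a for a, b in zip(series, series[1:])]

# On a log axis every doubling is the same step, log10(2), in both eras.
print(log_steps(early_nodes))
print(log_steps(later_nodes))

# In absolute terms the later steps are a thousand times larger.
print(absolute_steps(early_nodes))
print(absolute_steps(later_nodes))
```

The two sequences trace the same shape on a log axis, while each later step adds a thousand times more nodes than the corresponding earlier one. That gap between identical shape and very different scale is the distinction the paragraph draws.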

You cite the extension made by Kevin Drum of the log-log plot that I provide of key paradigm shifts in biological and technological evolution (which appears on page 17 of The Singularity Is Near). This extension is utterly invalid. You cannot extend in this way a log-log plot for just the reasons you cite. The only straight line that is valid to extend on a log plot is a straight line representing exponential growth when the time axis is on a linear scale and the value (such as price-performance) is on a log scale. In this case, you can extend the progression, but even here you have to make sure that the paradigms to support this ongoing exponential progression are available and will not saturate. That is why I discuss at length the paradigms that will support ongoing exponential growth of both hardware and software capabilities. It is not valid to extend the straight line when the time axis is on a log scale. The only point of these graphs is that there has been acceleration in paradigm shift in biological and technological evolution. If you want to extend this type of progression, then you need to put time on a linear x-axis and the number of years (for the paradigm shift or for adoption) as a log value on the y-axis. Then it may be valid to extend the chart. I have a chart like this on page 50 of my book.
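The kind of extension described as valid here, linear time on the x-axis and a log-scaled value on the y-axis, amounts to fitting a straight line to the logarithm of the values and reading it forward. The sketch below is a minimal illustration on synthetic data: the series, the starting value of 100, and the function names `fit_semilog` and `extrapolate` are all assumptions, not figures or methods from the book. It says nothing about whether the underlying paradigm will keep going, which the paragraph flags as a separate question.

```python
import math

def fit_semilog(times, values):
    """Least-squares line through (t, log10(value)).
    Returns (slope, intercept) of the fitted line."""
    logs = [math.log10(v) for v in values]
    n = len(times)
    mean_t = sum(times) / n
    mean_y = sum(logs) / n
    covariance = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, logs))
    variance = sum((t - mean_t) ** 2 for t in times)
    slope = covariance / variance
    intercept = mean_y - slope * mean_t
    return slope, intercept

def extrapolate(slope, intercept, t):
    """Read the fitted line at time t and convert back from log10."""
    return 10 ** (slope * t + intercept)

# Synthetic series (an assumption): a quantity that doubles every year.
years = [0, 1, 2, 3, 4]
values = [100 * 2 ** y for y in years]

slope, intercept = fit_semilog(years, values)
projected = extrapolate(slope, intercept, 6)  # two years past the data
print(slope, projected)
```

Because time stays on a linear scale, equal steps to the right on the chart are equal spans of years, so reading the line forward has a definite meaning. On a log time axis that property is lost, which is the objection raised above.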

This acceleration is a key point. These charts show that technological evolution emerges smoothly from the biological evolution that created the technology-creating species. You mention that an evolutionary process can create greater complexity — and greater intelligence — than existed prior to the process. And it is precisely that intelligence-creating process that will go into hyper drive once we can master, understand, model, simulate, and extend the methods of human intelligence through reverse-engineering it and applying these methods to computational substrates of exponentially expanding capability.

That chimps are just below the threshold needed to understand their own intelligence is a result of the fact that they do not have the prerequisites to create technology. There were only a few small genetic changes, comprising a few tens of thousands of bytes of information, which distinguish us from our primate ancestors: a bigger skull, a larger cerebral cortex, and a workable opposable appendage. There were a few other changes that other primates share to some extent, such as mirror neurons and spindle cells.

As I pointed out in my Long Now talk, a chimp’s hand looks similar to a human’s, but the pivot point of the thumb does not allow facile manipulation of the environment. In contrast, our human ability to look inside the human brain and model, simulate and recreate the processes we encounter has already been demonstrated. The scale and resolution of these simulations will continue to expand exponentially. I make the case that we will reverse-engineer the principles of operation of the several hundred information processing regions of the human brain within about twenty years and then apply these principles (along with the extensive tool kit we are creating through other means in the AI field) to computers that will be many times (by the 2040s, billions of times) more powerful than needed to simulate the human brain.

You write, “Kurzweil found that if you make a very crude comparison between the processing power of neurons in human brains and the processing powers of transistors in computers, you could map out the point at which computer intelligence will exceed human intelligence.” That is an oversimplification of my analysis. I provide in my book four different approaches to estimating the amount of computation required to simulate all regions of the human brain based on actual functional recreations of brain regions. These all come up with answers in the same range, from 10^14 to 10^16 cps for creating a functional recreation of all regions of the human brain, so I’ve used 10^16 cps as a conservative estimate.

This refers only to the hardware requirement. As noted above, I have an extensive analysis of the software requirements. Reverse-engineering the human brain is not the only source of intelligent algorithms (and, in fact, has not been a major source at all until recently, as we didn’t have scanners that could see into the human brain with sufficient resolution). However, my analysis of reverse-engineering the human brain is along the lines of an existence proof that we will have the software methods underlying human intelligence within a couple of decades.

Another important point in this analysis is that the complexity of the design of the human brain is about a billion times simpler than the actual complexity we find in the brain. This is due to the brain (like all biology) being a probabilistic recursively expanded fractal. This discussion goes beyond what I can write here, although it is in the book. We can ascertain the complexity of the human brain’s design, because the design is contained in the genome. I show that the genome (including non-coding regions) only has about 30 to 100 million bytes of compressed information in it due to the massive redundancies in the genome.

In summary, I agree that the singularity is not a discrete event. A single point of infinite growth or capability is not the metaphor being applied. Yes, the exponential growth of all facets of information technology is smooth, but it is nonetheless explosive and transformative.

|

Where there is the necessary technical skill to move mountains, there is no need for the faith that moves mountains -anon-

|

|

|

Fritz

Adept

Gender:

Posts: 1746

Reputation: 7.64

|

|

Re:Singularity sighted.

« Reply #66 on: 2015-08-19 11:35:15 » |

|

Power and Control: you'd wonder whether, as we merge into technology, the Darwinian Gene-based prime directives buffered with social Memes should alter into something else. But what would be the Temes that govern trans-human behaviour, and would they find it in their best interest to continue down the 'us and them' path, or would a 'cooperative' synergy like a hive entity be more advantageous?

Cheers

Fritz

What if one Country Achieves the Singularity First?

Source: Motherboard

Author: Zoltan Istvan

Date: 2015.04.21

Zoltan Istvan is a futurist, author of The Transhumanist Wager, and founder of and presidential candidate for the Transhumanist Party. He writes an occasional column for Motherboard in which he ruminates on the future beyond natural human ability.

The concept of a technological singularity is tough to wrap your mind around. Even experts have differing definitions. Vernor Vinge, responsible for spreading the idea in the 1990s, believes it's a moment when growing superintelligence renders our human models of understanding obsolete. Google's Ray Kurzweil says it's "a future period during which the pace of technological change will be so rapid, its impact so deep, that human life will be irreversibly transformed." Kevin Kelly, founding editor of Wired, says, "Singularity is the point at which all the change in the last million years will be superseded by the change in the next five minutes." Even Christian theologians have chimed in, sometimes referring to it as "the rapture of the nerds."

My own definition of the singularity is: the point where a fully functioning human mind radically and exponentially increases its intelligence and possibilities via physically merging with technology.

All these definitions share one basic premise—that technology will speed up the acceleration of intelligence to a point when biological human understanding simply isn’t enough to comprehend what’s happening anymore.

That also makes a technological singularity something quasi-spiritual, since anything beyond understanding evokes mystery. It’s worth noting that even most naysayers and luddites who disdain the singularity concept don't doubt that the human race is heading towards it.

In March 2015, I published a Motherboard article titled A Global Arms Race to Create a Superintelligent AI is Looming. The article argued a concept I call the AI Imperative, which says that nations should do all they can to develop artificial intelligence, because whichever country produces an AI first will likely end up ruling the world indefinitely, since that AI will be able to control all other technologies and their development on the planet.

The article generated many thoughtful comments on Reddit Futurology, LessWrong, and elsewhere. I tend not to comment on my own articles in an effort to stay out of the way, but I do always carefully read comment sections. One thing the message boards on this story made me think about was the possibility of a "nationalistic" singularity—what might also be called an exclusive, or private singularity.